Concept

the first part is same as Steepest Descent Method, but after find the new , we calculate next instead of finding steepest descent by

and

x0 = initial guess

g0 = \nabla f(x0)

s0 = g0

for k = 0, 1, 2,...

Choose αk to minimize f(xk + αk sk)

xk+1 = xk + αk sk

gk+1 = \nabla f(xk+1)

βk+1 = (g^Tk+1 gk+1)/(g^Tk gk)

sk+1 = gk+1 + βk+1sk

end

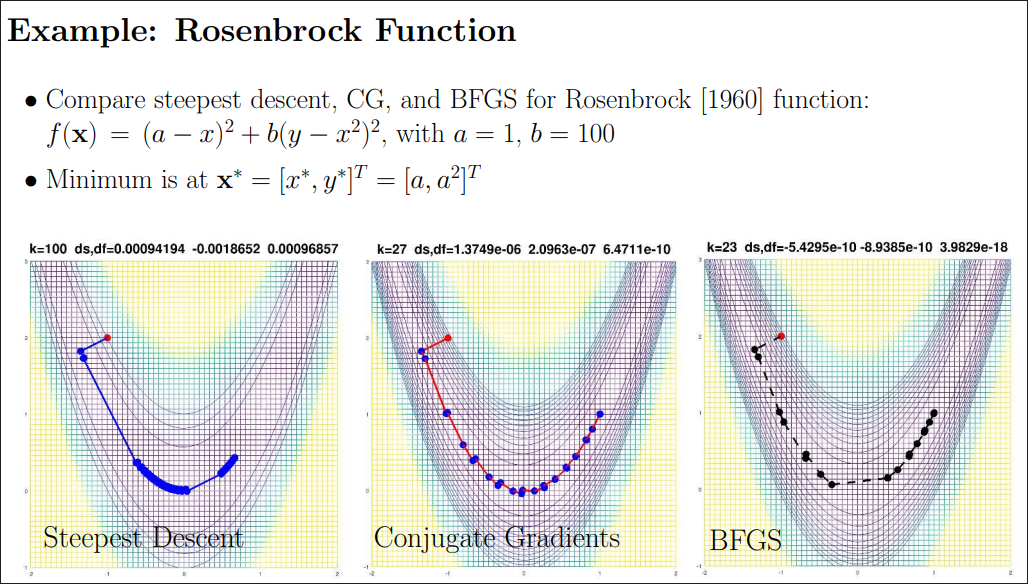

Conjugate Gradient Method is only different with Steepest Descent Method with , if we set constantly equal to , it will be same as Steepest Descent Method.

Cost

unlike Newton’s method, this one doesn’t need to calculate Hessian matrix nor , it only need to calculate gradient and line search.

Code

import numpy as np

from typing import Callable, Tuple

def backtracking_line_search(

f: Callable[[np.ndarray], float],

grad_f: Callable[[np.ndarray], np.ndarray],

x: np.ndarray,

d: np.ndarray, # direction, usually - \nabla grad_f

alpha_init: float = 1.0,

rho: float = 0.5, # back trace factor

c: float = 1e-4 # Armijo cond

) -> float:

alpha = alpha_init

f_x = f(x)

grad_dot_d = np.dot(grad_f(x), d) # \nabla f(x)^Td

# Armijo cond: f(x + α*d) \leqd f(x) + c*α* \nabla f(x)^Td

while f(x + alpha * d) > f_x + c * alpha * grad_dot_d:

alpha *= rho # backtrace

return alpha

def conjugate_gradient(

f: Callable[[np.ndarray], float],

grad_f: Callable[[np.ndarray], np.ndarray],

x0: np.ndarray,

max_iter: int = 1000,

tol: float = 1e-6

) -> Tuple[np.ndarray, float, int]:

x = x0.copy()

n = len(x)

g = grad_f(x)

d = -g.copy()

iterations = 0

for i in range(max_iter):

if np.linalg.norm(g) < tol:

break

alpha = backtracking_line_search(f, grad_f, x, d)

x_new = x + alpha * d

g_new = grad_f(x_new)

beta = np.dot(g_new, g_new) / np.dot(g, g)

d = -g_new + beta * d

x = x_new

g = g_new

iterations += 1

# reset direction

if (i + 1) % n == 0:

d = -g.copy()

return x, f(x), iterations